Interactive visualization, WebVR, generative video and sound, machine learning

Project Description

“The network” has become a defining concept of our epoch. — Wendy Chun

In Plantfluencer Gazing, I explore how we might form symbiotic relationships with evolving patterns of digital information, while questioning whether decentralization is truly possible within existing infrastructures. The project began during the COVID-19 lockdowns, a time when many of us became hyper-attuned to our domestic spaces. I was fascinated by how houseplants, especially species like Monstera deliciosa, became aesthetic tokens of idealized lifestyles on Instagram.

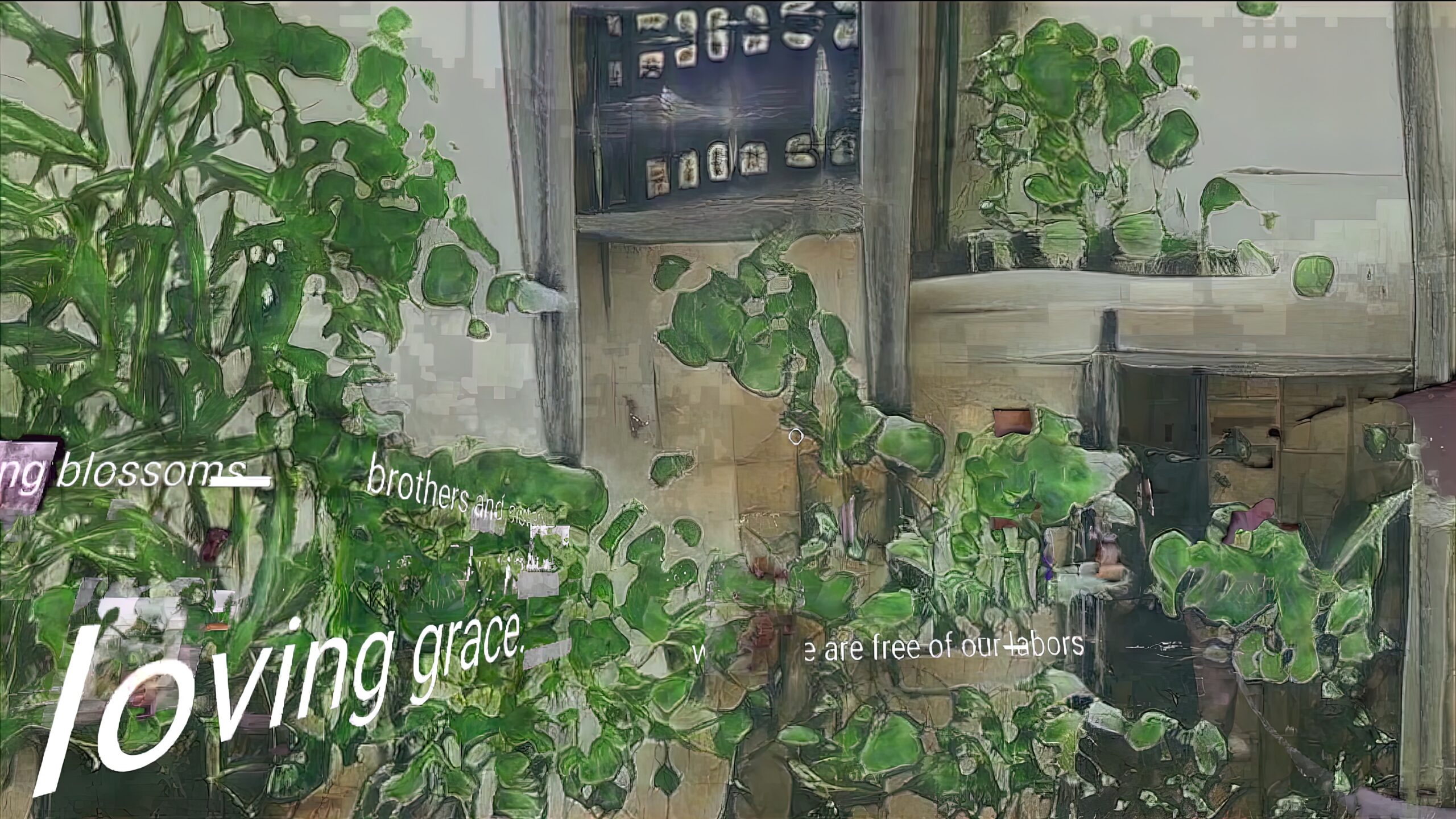

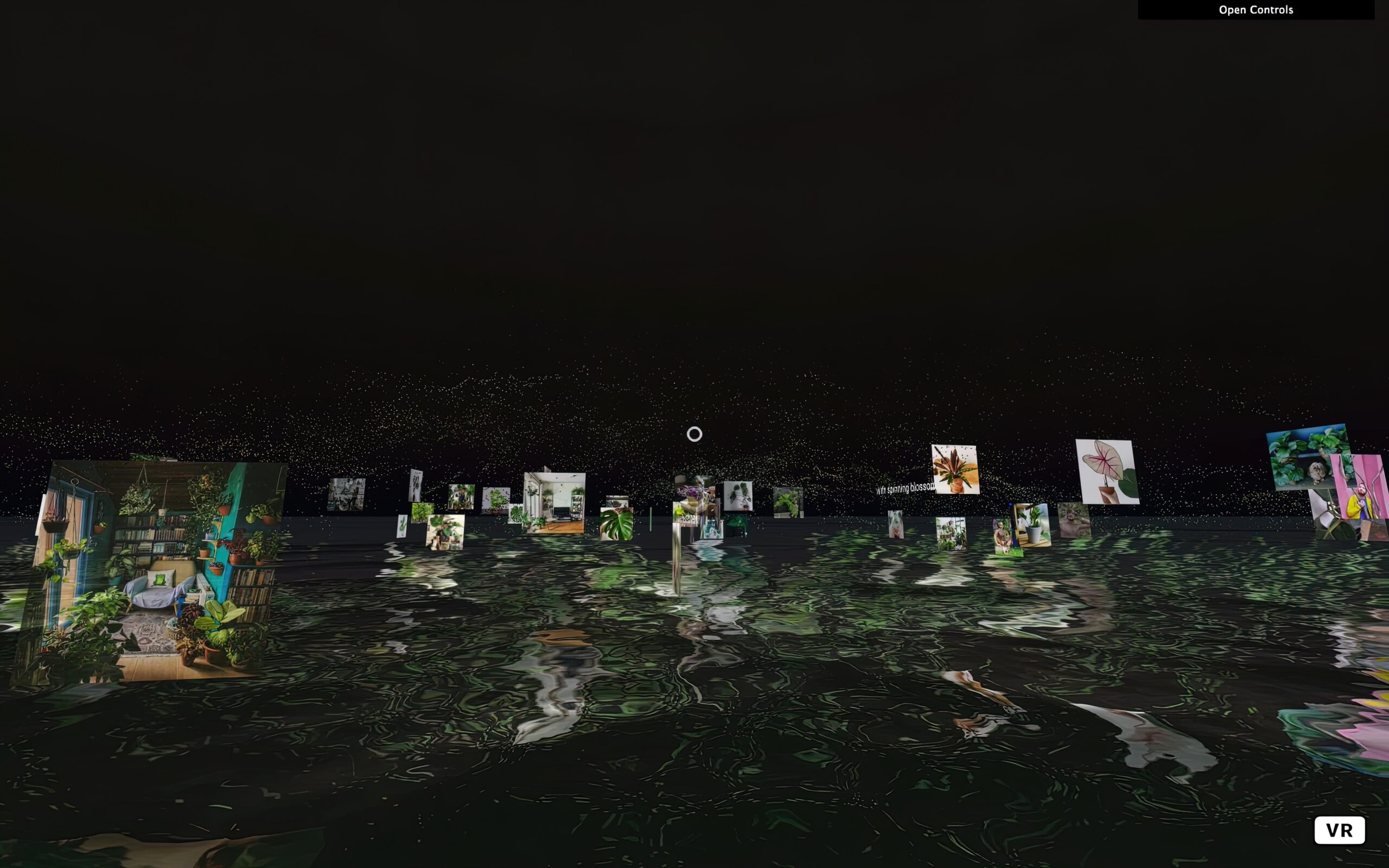

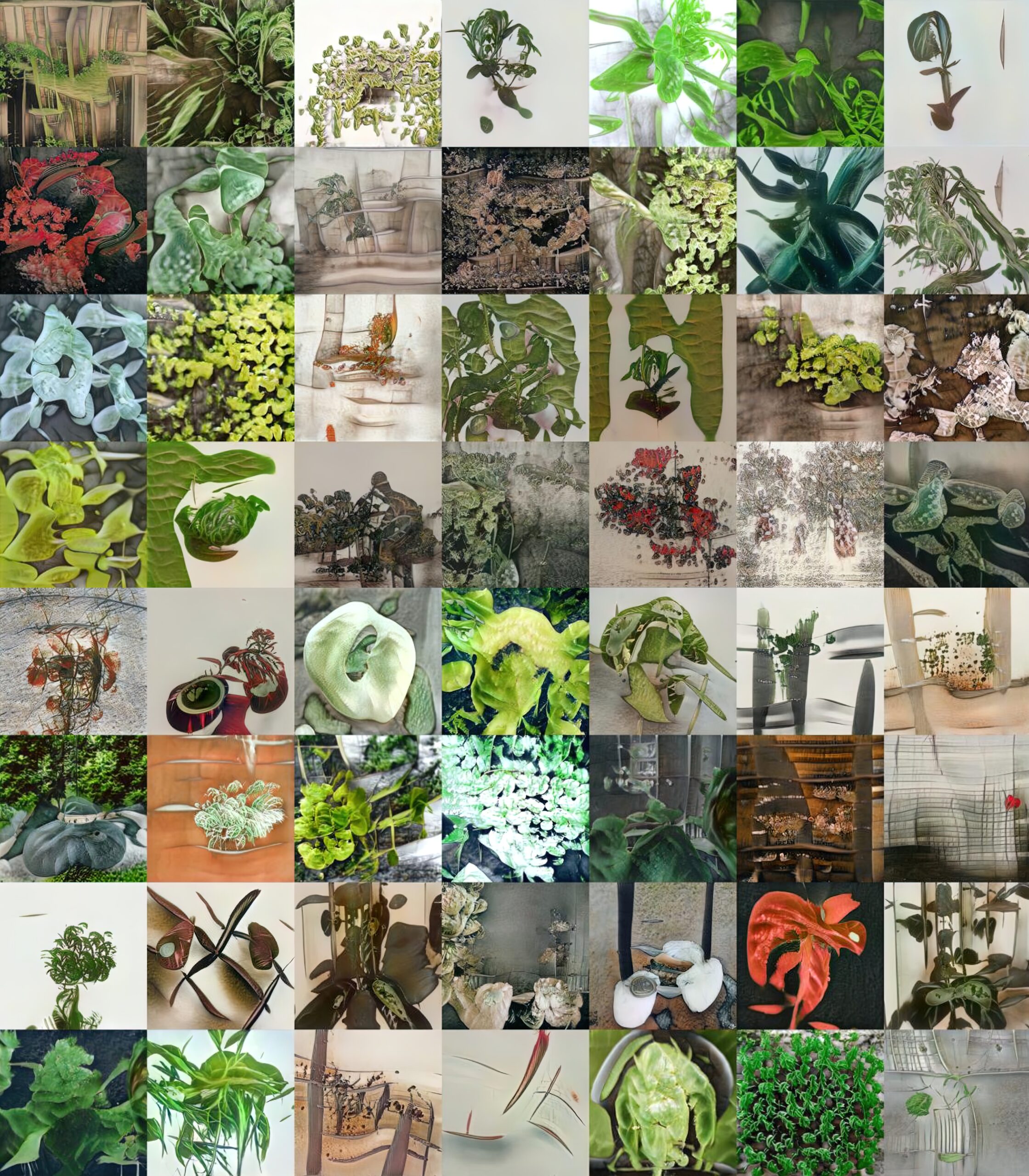

Using custom web crawlers, I collected popular hashtags related to these “plantfluencer” trends and trained machine learning models on the aggregated data. These models generated latent video representations of algorithmically curated plant scenes—images not captured by a camera, but synthesized through the logic of networked attention. To deepen the multisensory experience, I integrated WebVR and custom sonification tools to translate visual patterns into spatialized sound.
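One common way to turn a trained generative model into video is to walk through its latent space and render one frame per step. The sketch below illustrates only that idea and is not the project's actual code: the latent dimension, the keyframe count, and the helper names (`slerp`, `latent_walk`, `random_latent`) are assumptions made for illustration, and the generator that would turn each vector into an image is left out.

```python
import math
import random

LATENT_DIM = 512  # assumed size; the project's real latent dimension is not stated


def slerp(a, b, t):
    """Spherically interpolate between latent vectors a and b at t in [0, 1]."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding error
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if sin_omega < 1e-6:
        # Vectors are (anti)parallel: fall back to plain linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    wa = math.sin((1 - t) * omega) / sin_omega
    wb = math.sin(t * omega) / sin_omega
    return [wa * x + wb * y for x, y in zip(a, b)]


def latent_walk(keyframes, steps_per_segment):
    """Return the latent vectors along a path through consecutive keyframes."""
    path = []
    for a, b in zip(keyframes, keyframes[1:]):
        for i in range(steps_per_segment):
            path.append(slerp(a, b, i / steps_per_segment))
    path.append(list(keyframes[-1]))  # close the walk on the final keyframe
    return path


def random_latent(dim, rng):
    """Sample a latent vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


rng = random.Random(0)
keys = [random_latent(LATENT_DIM, rng) for _ in range(3)]
path = latent_walk(keys, steps_per_segment=30)
# Each vector in `path` would be passed to a generator to render one video frame.
```

Spherical rather than linear interpolation is a frequent choice for Gaussian latents because it keeps intermediate vectors at a norm similar to the samples the model saw in training. Whether the project used it is not documented here.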

By combining immersive media with social media aesthetics, the project constructs a hybrid environment where virtual and physical, public and private, digital and analog layers intersect. I see this as a way to make visible the invisible flows of desire, normativity, and data-driven recommendation systems.

As we scroll through news feeds on ubiquitous screens, we not only see others—we also project ourselves. This feedback loop, often driven by homophily (“similarity breeds connection”), reflects how platforms flatten complex behaviors into predictable trends. Through Plantfluencer Gazing, I wanted to question what it means to be seen—by others, by algorithms, and by ourselves—within a mediated network of affect and representation.

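Project Introduction

Plantfluencer Gazing explores how to form symbiotic relationships with evolving entities made of patterns of information, and questions whether decentralization can only ever be an illusion within existing infrastructures.

The artist used crawler algorithms to collect the Instagram plant hashtags that became popular during the pandemic and trained machine learning models on them, generating the plant scenes best suited to circulating on social media and further exploring connectivity and collectivity. In addition, WebVR and a sonification program create a hybrid environment that links physical and virtual entities, public and private spheres, and digital and analog experiences.

As we scroll across pervasive screens, we see others and ourselves at once, creating an imagined network in which “similarity breeds connection.” In big data there are no accidents or slips of the tongue; every action reveals a larger unconscious pattern. The work reveals how humans, nonhumans, and technical objects co-constitute one another within a flattened ontology established through the algorithmic gaze.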

Screenshot of a WebVR experience viewed on a laptop browser.

Demo video of the WebVR experience.

Video documentation from the 2023 Flare International New Media Arts Festival, Metro City, Shanghai, China.

Latent-space image generated by the trained GAN.

Exhibited at

- 2023 Flare International New Media Arts Festival, Metro City, Shanghai, China

- 2021 -AND-, curated by Christof Migone, 12-hour online performance, co-presented by Arraymusic & partners, Toronto / Online, Canada

- 2021 Art for the Future Biennale, The Multimedia Art Museum, Moscow, Russia

- 2021 Stranger Senses, Ars Electronica Festival – PostSensorium Garden Program, Online / Austria